Introduction to UMID for Broadcasters and TV Production Companies

by Mark Andrews, April 2017.

Despite being available as an SMPTE standard since 2000, take-up of UMID (Unique Material Identifier) has been slow within the TV production and broadcast industry. But with file-based workflows becoming the norm, coupled with the increased deployment of cloud operations and the sharing of Media Asset Management (MAM) systems to provide federated searches across A/V organisations, the benefits of having UMID as part of a metadata strategy will become much more apparent.

In the past, video clips were identified by a number of unstructured methods, such as the tape number and timecode. With the move to file-based workflows, organisations were quick to adopt MXF file formats for interoperability. But again, each organisation came up with its own method of uniquely identifying video clips. The issue is compounded when clips are edited, because there is no structure governing how the modified clips are given unique identifiers of their own. With more broadcasters becoming global, and a growing willingness to monetise and share content, a standard approach to uniquely identifying video and audio assets across the world is becoming ever more important.

Stand-alone files, such as MXF files, rely on a media asset or production management system (MAM/PAM) to provide a unique identifier, which in turn brings its own management issues. Federated searches and the migration of content between MAM systems become difficult. Also, if a file becomes orphaned, how does the system cope with its identifier when the file is subsequently re-ingested? Any metadata pertaining to it in the MAM is lost, because the original link is now missing. And if no standard is applied when the metadata is created, there is always the possibility of duplicated "unique" identifiers.

The benefit of UMID is highlighted by the following example.

After the Great East Japan Earthquake in 2011, many HDCAM tapes were delivered to the news production studio all at once and piled up on a desk. Because of the hectic situation, and because material on tape is not easy to preview, only a small fraction of the gathered clips was used for the news on air. As a result, viewers were forced to watch the same scenes repeatedly.

In addition, the volume of material acquired during the period was so huge that it has taken years to archive it in an appropriate, "ready for search" fashion. This problem would have been avoided if all the material had been acquired as MXF files with automatically generated UMIDs.

First, with a UMID-based material identification scheme, the acquired materials can easily be brought together under a (virtual) single material management system. Second, if the UMID Source Pack, including the camera shooting direction, were also used, an initial classification of the acquired materials could be done **automatically** according to the shooting time ("When"), the shooting position and camera direction ("Where") and/or the camera operator ("Who"). This would have greatly assisted the initial classification of the media, making it immediately available to ingest, search and use without a major metadata classification exercise.
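The automatic classification described above can be sketched as a simple grouping step. This is a minimal illustration only: it assumes the "When", "Where" and "Who" values have already been decoded from each clip's Source Pack, and the `ClipInfo` record, the `classify` function and all sample values are hypothetical placeholders rather than anything defined by the UMID standard.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ClipInfo:
    """Hypothetical record of values already decoded from a Source Pack."""
    umid: str           # placeholder identifier, not a real UMID
    shot_at: datetime   # "When"
    latitude: float     # "Where"
    longitude: float
    operator: str       # "Who"


def classify(clips):
    """Group clip identifiers by (shooting date, rough position, operator)."""
    groups = defaultdict(list)
    for clip in clips:
        key = (
            clip.shot_at.date().isoformat(),
            round(clip.latitude, 1),    # coarse position bucket
            round(clip.longitude, 1),
            clip.operator,
        )
        groups[key].append(clip.umid)
    return dict(groups)


# Made-up sample values, for illustration only.
clips = [
    ClipInfo("umid-a", datetime(2017, 4, 24, 15, 0), 38.26, 140.87, "crew-1"),
    ClipInfo("umid-b", datetime(2017, 4, 24, 16, 30), 38.27, 140.88, "crew-1"),
    ClipInfo("umid-c", datetime(2017, 4, 25, 9, 0), 35.68, 139.77, "crew-2"),
]
groups = classify(clips)
for key, umids in groups.items():
    print(key, umids)
```

Because the grouping key comes entirely from metadata recorded at the time of shooting, no manual logging is needed before the clips can be sorted into rough bins.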

UMID has been developed and standardised by SMPTE (Society of Motion Picture and Television Engineers), with the latest revision of the standard dating from 2011. It allows a globally unique identifier to be created at the same moment that the video or audio clip is created.

The Sony HDCAM series (2001 version) was the first to adopt the UMID. There are two forms of UMID: the Basic UMID (32 bytes) and the Extended UMID (64 bytes). HDCAM used the Extended UMID, which is created using an optional GPS unit attached to the HDCAM camcorder and recorded on the HDCAM tape (alongside the corresponding frame data) during shooting.
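To make the two sizes concrete, the following sketch separates a UMID into its basic part and the remainder. It assumes that an Extended UMID is simply the 32-byte Basic UMID followed by a 32-byte Source Pack; that layout, and the function name `split_umid`, are assumptions for illustration and should be checked against the SMPTE standard itself.

```python
BASIC_UMID_LEN = 32     # bytes, as stated in the article
EXTENDED_UMID_LEN = 64  # bytes, as stated in the article


def split_umid(umid: bytes):
    """Return (basic_part, source_pack); source_pack is None for a Basic UMID.

    Assumption: the extended form is the basic form followed by the Source Pack.
    """
    if len(umid) == BASIC_UMID_LEN:
        return umid, None
    if len(umid) == EXTENDED_UMID_LEN:
        return umid[:BASIC_UMID_LEN], umid[BASIC_UMID_LEN:]
    raise ValueError(f"unexpected UMID length: {len(umid)} bytes")


# Dummy byte patterns, not real UMIDs.
basic, source_pack = split_umid(bytes(range(64)))
print(len(basic), len(source_pack))
```

A caller that only needs identification can work with the first element and ignore the second, which is why the same function accepts both forms.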

Another major benefit of the UMID standard is that the UMID is embedded in the MXF file, so the file identifies itself in a globally unique way. This means that files can be used in any production workflow without relying on a proprietary MAM system to create a look-up identifier that is specific to that MAM system alone. The unique identifier also remains constant at every point in the production workflow, from ingest to playout to archive. The advantages are numerous: the management process is simplified, and interoperability across many disparate MAM and PAM systems from different organisations becomes much easier, without fear of duplication or deletion of the unique identifier. When a file is modified, the standard calls for a new UMID to be created to distinguish the modified file from the original. The original UMID is preserved.

Another part of the standard is the UMID “resolution protocol”. This is a standard method of converting a given UMID into the URL of the MXF file that the UMID uniquely identifies. Because the UMID itself contains no information about where the target AV material exists as an MXF file, an application has no way of accessing the file even when it knows the UMID. By using the UMID resolution protocol, the application can obtain the URL of the MXF file identified by the UMID given to it, and then access the file by FTP or HTTP.
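The idea of resolving a UMID to a location can be sketched with a simple in-memory lookup. This is not the SMPTE resolution protocol, whose details are not given here; the `UmidResolver` class, its methods and the example URL are all hypothetical, and a real resolver would be a network service rather than a local dictionary.

```python
from typing import Optional


class UmidResolver:
    """Hypothetical stand-in for a resolution service: maps a UMID to a URL."""

    def __init__(self) -> None:
        self._locations = {}

    def register(self, umid: bytes, url: str) -> None:
        # Store the location under the hex form of the UMID bytes.
        self._locations[umid.hex()] = url

    def resolve(self, umid: bytes) -> Optional[str]:
        # Return the registered URL, or None if the UMID is unknown.
        return self._locations.get(umid.hex())


resolver = UmidResolver()
sample_umid = bytes(32)  # dummy 32-byte value, not a real UMID
resolver.register(sample_umid, "http://media.example.com/clips/clip0001.mxf")
print(resolver.resolve(sample_umid))
```

The point of the design is that the identifier stays the same while the stored location can change, so moving a file only requires updating the resolver entry.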

The UMID resolution protocol is also currently undergoing standardisation within SMPTE.

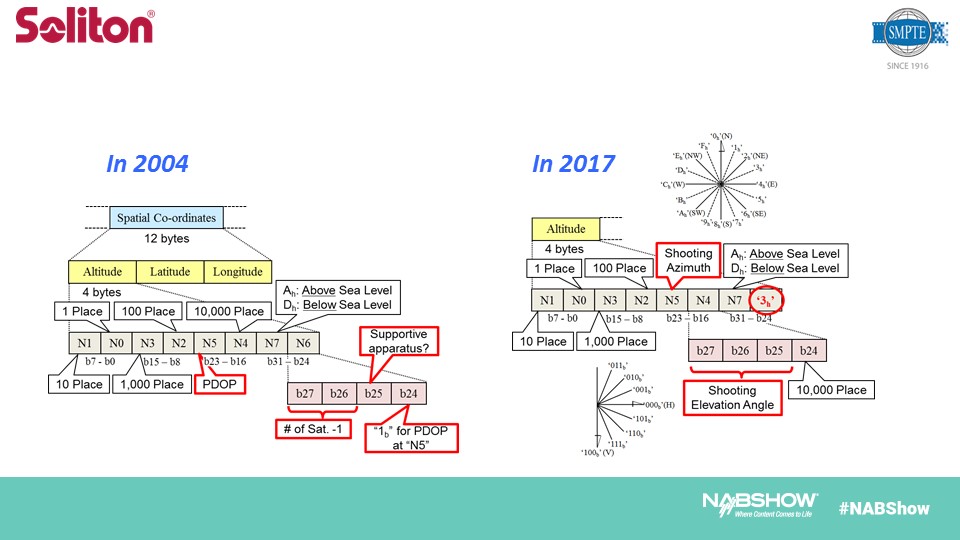

At NAB 2017 in Las Vegas, Soliton’s Yoshi Shibata, Chair of the SMPTE TC-30MR Study Group on UMID Applications, will present some of the latest thinking on UMID. Since 2004, part of the identifier has carried information such as the number of GPS satellites and the PDOP (Position Dilution of Precision). In the new standard proposed by the Study Group, this will be adapted to include the shooting direction of the camera, along with longitude, latitude and altitude.

Soliton Systems K.K. is also researching how UMID and other metadata can be embedded into an encoded video stream, for example in live news gathering or live sports production, where H.265 is streamed across cellular 3G/4G networks.

At the conference, Yoshi will also discuss the UMID in the context of Video over Internet Protocol (VoIP), currently one of the hottest topics in the Media & Entertainment industry, as well as the mapping of H.265/HEVC to MXF.

Yoshi’s presentation, entitled “To Maximize Interoperability in Mobile News Gathering”, will take place on Monday 24 April 2017, from 11:00 to 11:30, at N258, Las Vegas Convention Centre.

This will be part of the Broadcast Engineering and Information Technology (BEIT) Conference.

We look forward to seeing you in Las Vegas!